Virtualizor now supports High Availability for KVM Virtualization.

To use it on a Proxmox + Virtualizor setup, see this guide:

https://www.virtualizor.com/docs/install/install-proxmox/#ha-live-mirgration

Requirements

- Fresh server running CentOS 7.x, AlmaLinux 8.x, or Ubuntu 20.04 / 22.04

- yum/apt

- Shared storage to create the VPS disks (permissions: qemu:qemu on CentOS/AlmaLinux hosts and libvirt-qemu:kvm on Ubuntu hosts).

- Shared mount point for KVM XML configuration files at /etc/libvirt on your KVM nodes. (Existing data under that directory must be saved somewhere temporarily so that it can be restored after mounting your shared directory on /etc/libvirt.)

- At least four nodes, including the Virtualizor master, to create an HA cluster with Virtualizor KVM (to get a reliable quorum).

- An IP pool shared across the HA server group, so that the same IP works on the server the VM is migrated to on failure. The domain forwarding option will not work in this case.

- Virtualizor version 2.9.9 or later
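The /etc/libvirt requirement above involves saving the existing configuration, mounting the shared directory, and then restoring the files. A minimal sketch of the save and restore steps follows. The function names and the backup path /root/libvirt-backup are hypothetical, and the mount step depends on your shared storage, so it appears only as a comment.

```shell
# Copy an existing configuration directory aside before the shared
# mount hides its contents. Arguments: source dir, backup dir.
backup_config() {
    mkdir -p "$2" && cp -a "$1/." "$2/"
}

# Copy the saved files back once the shared directory is mounted.
# Arguments: backup dir, target dir.
restore_config() {
    cp -a "$1/." "$2/"
}

# Example (run as root on a KVM node; the backup path is an assumption):
#   backup_config /etc/libvirt /root/libvirt-backup
#   ... mount your shared directory on /etc/libvirt ...
#   restore_config /root/libvirt-backup /etc/libvirt
```

Nothing runs until the functions are called, so the paths can be reviewed first.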

Installation

- Log in to the Virtualizor Master with the server's root details

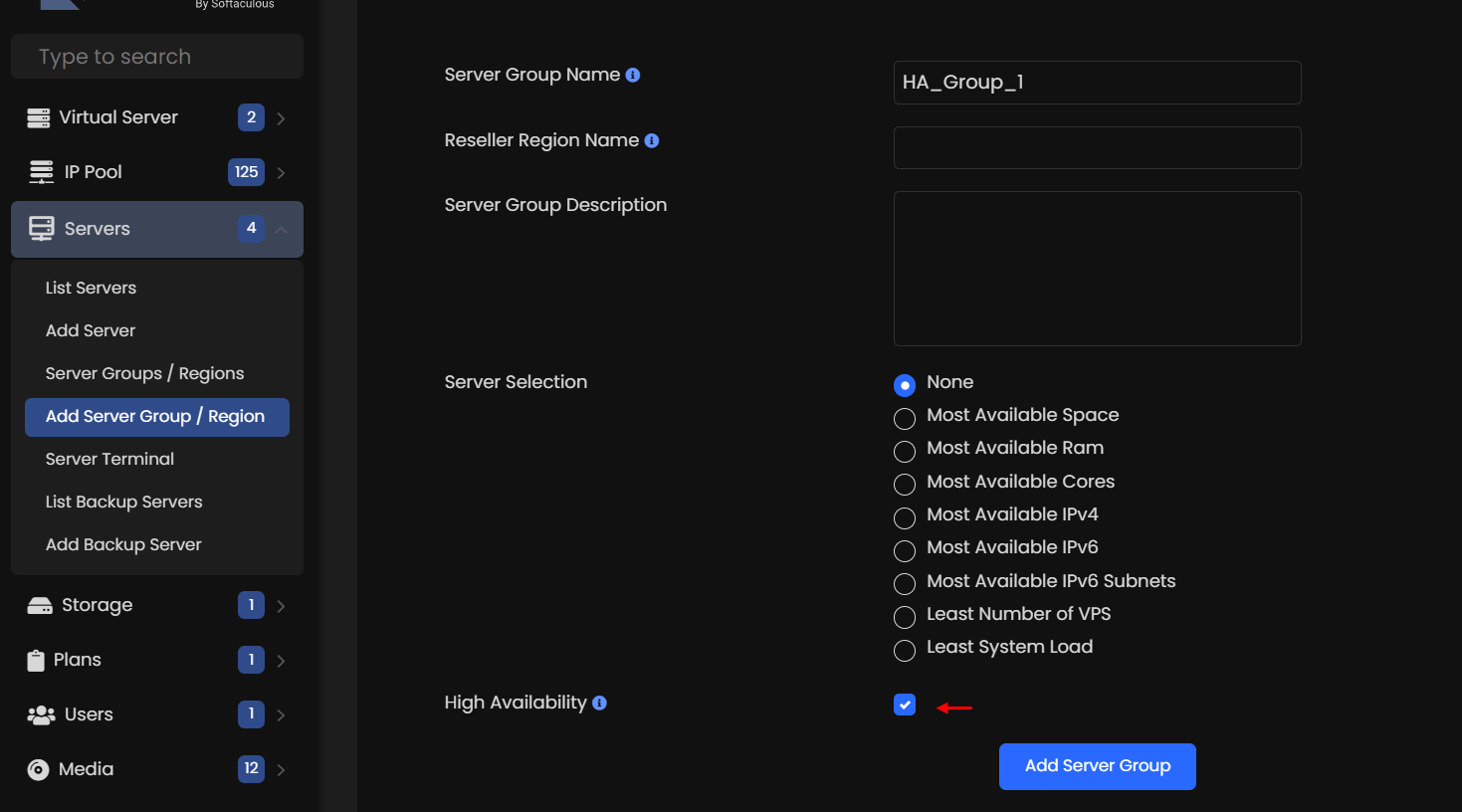

- Click on Servers -> Add Server Groups and check the High Availability checkbox to enable High Availability for the server group.

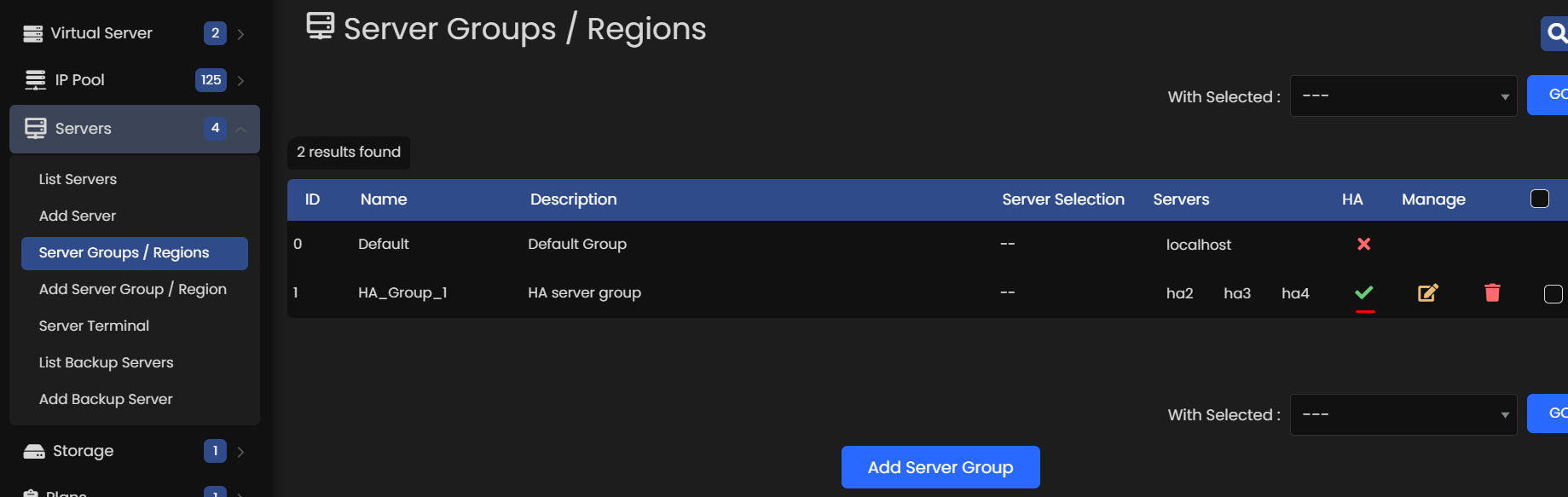

Check that the server group has HA enabled.

- Click on Servers -> Server Groups/Regions

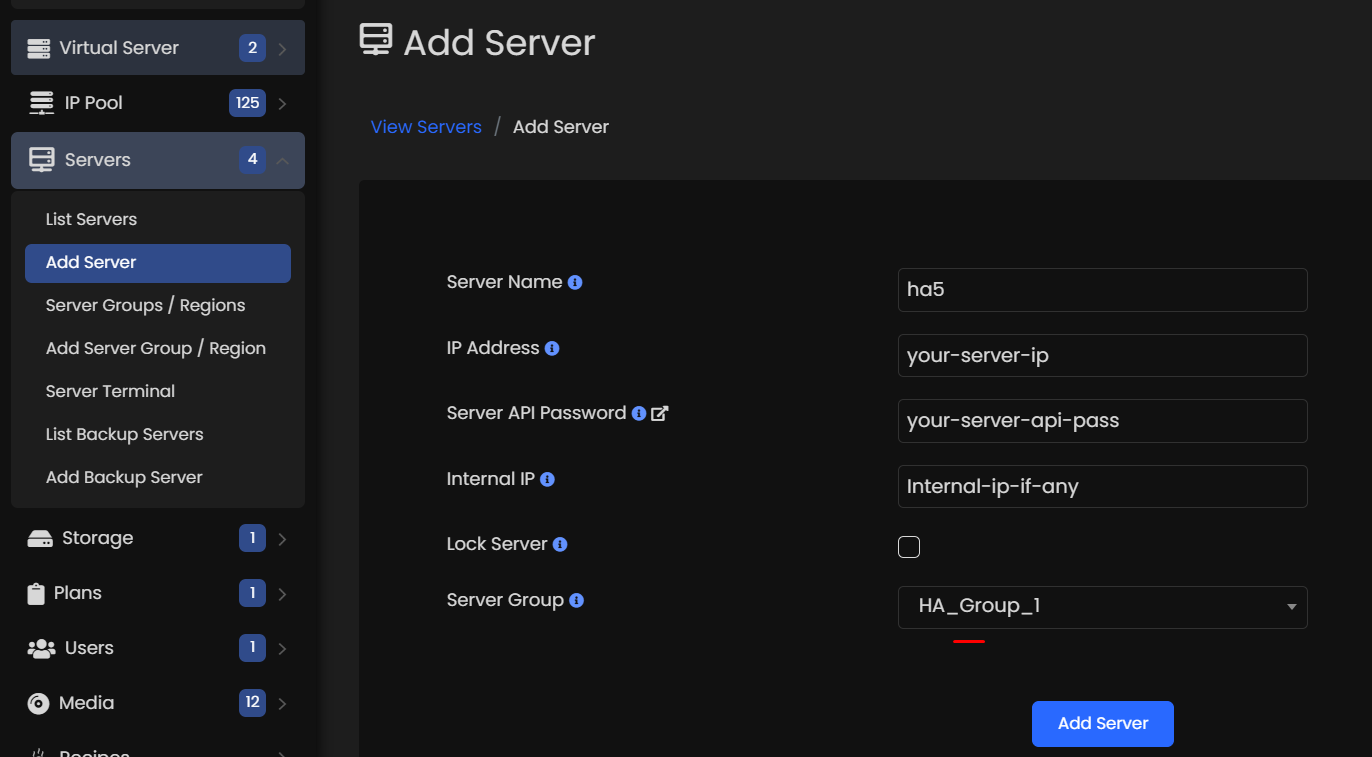

Once you have entered all the information for the new server and selected the HA-enabled server group, click on Add Server.

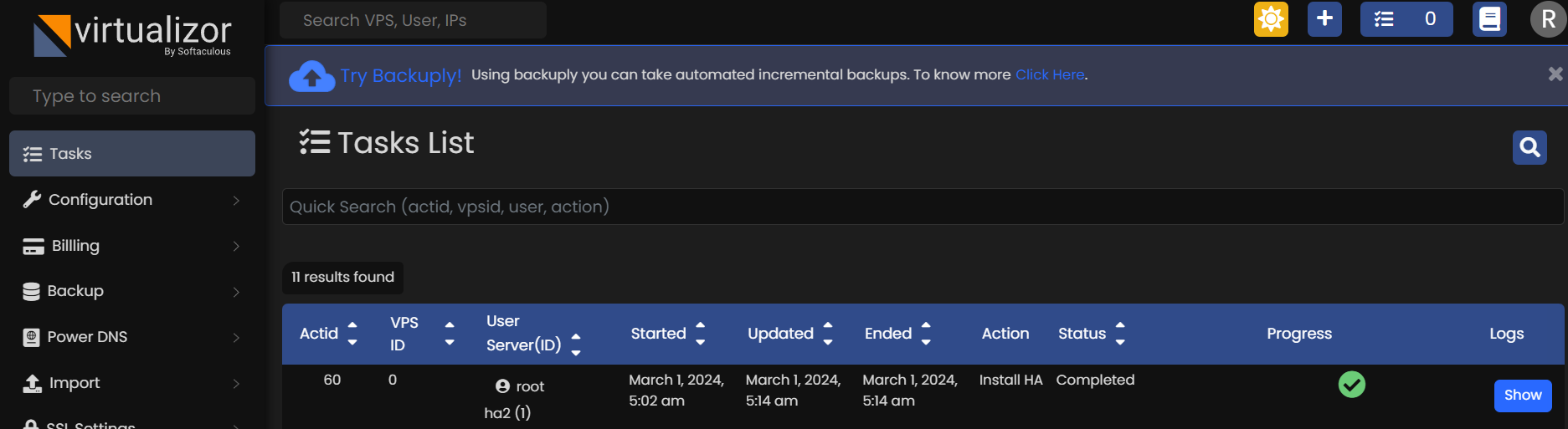

You can follow the installation progress in the task wizard.

Create VPS with HA Enabled

If the server has HA enabled, the VM will automatically be created with HA enabled.

NOTE: The High Availability option is shown if the selected server belongs to an HA-enabled server group.

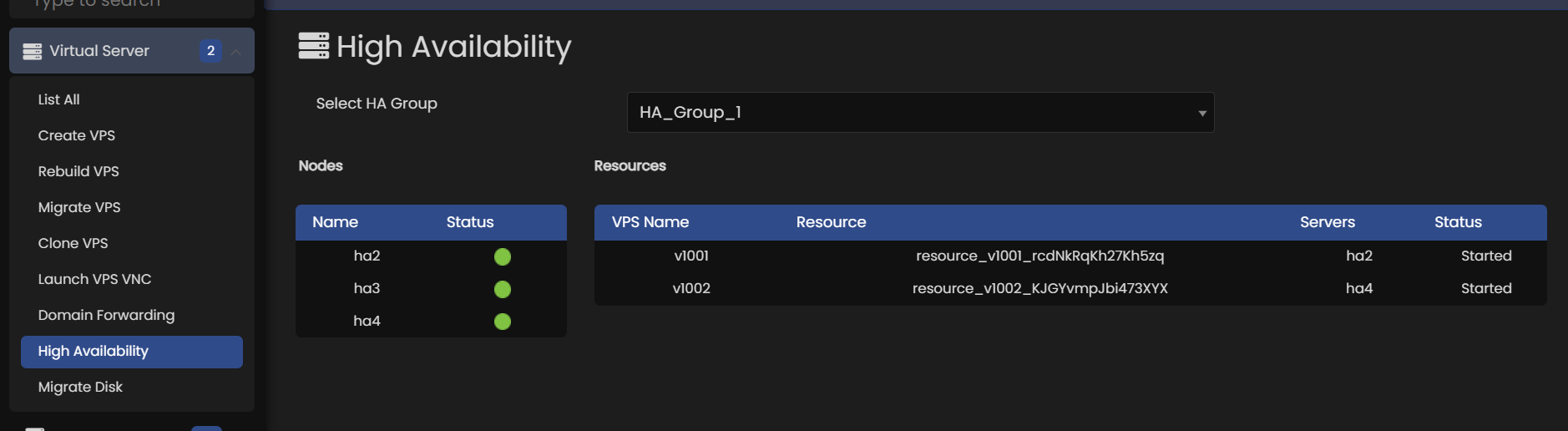

Monitor HA Cluster(s)

Once you have created or added a server with HA enabled, you can monitor the resources created on those HA clusters.

To check resources and nodes, go to Admin Panel -> Virtual Servers -> High Availability.

You can see that the status of the v1001 resource is Started on a particular node (in this example, ha2).

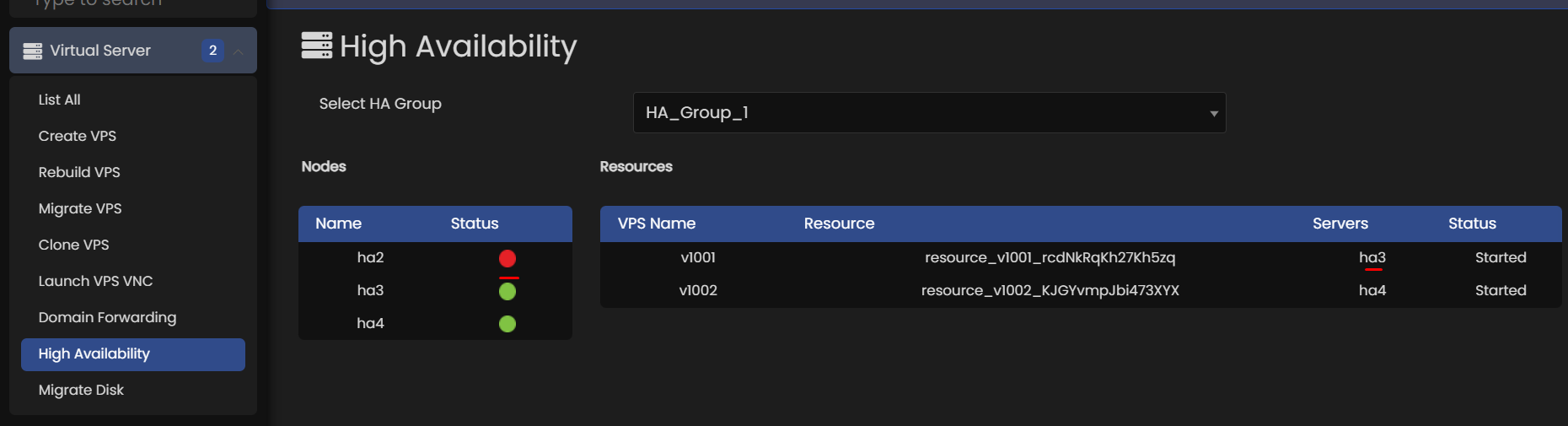

To trigger a failover, shut down Pacemaker and Corosync on that machine.

A cluster command such as pcs cluster stop nodename can be run from any node in the cluster, not just the affected node.
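For the example above, where v1001 runs on ha2, stopping the cluster services on that node might look like the sketch below. The node name comes from the example. The check for pcs is only there so the snippet fails gracefully on a machine that is not a cluster node.

```shell
# Node that currently runs the resource (ha2 in the example above).
NODE="ha2"

if command -v pcs >/dev/null 2>&1; then
    # Stops Pacemaker and Corosync on the named node.
    pcs cluster stop "$NODE"
else
    echo "pcs not found; run this on a cluster node" >&2
fi
```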

Verify that Pacemaker and Corosync are no longer running on the ha2 server.
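One way to check this on ha2 is to ask systemd for the state of both services. This is a sketch that assumes the default unit names pacemaker and corosync. The helper name service_state is hypothetical.

```shell
# Print the systemd state of a unit, or "unknown" if it cannot be read.
service_state() {
    state=""
    if command -v systemctl >/dev/null 2>&1; then
        # is-active exits non-zero for stopped units, so ignore the exit code.
        state="$(systemctl is-active "$1" 2>/dev/null || true)"
    fi
    echo "${state:-unknown}"
}

for svc in pacemaker corosync; do
    echo "${svc}: $(service_state "$svc")"
done
```

After a successful stop, both services should report a state other than active.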

Go to another node and check the cluster status.
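A sketch of that check, run on one of the remaining nodes. It assumes the resource name contains the VPS ID (v1001), as the resource names shown elsewhere in this guide suggest.

```shell
# VPS ID from the example above.
VPS="v1001"

if command -v pcs >/dev/null 2>&1; then
    # Full cluster status: nodes, quorum, and resources.
    pcs status
    # Only the lines that mention the example VPS.
    pcs status | grep "$VPS"
else
    echo "pcs not found; run this on a cluster node" >&2
fi
```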

Notice that v1001 is now running on ha3.

Failover happened automatically and no errors are reported.

You can also view this in Admin Panel -> Virtual Servers -> High Availability.

Use corosync-cfgtool to check whether cluster communication is active.
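For example, the -s option prints the status of the Corosync links on the local node. The guard below is only there so the snippet does not fail on a machine without Corosync installed.

```shell
CFGTOOL="corosync-cfgtool"

if command -v "$CFGTOOL" >/dev/null 2>&1; then
    # Show the link (ring) status for this node.
    "$CFGTOOL" -s
else
    echo "$CFGTOOL not found; run this on a cluster node" >&2
fi
```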

The pcs status command should always show partition with quorum, and it should not show any stonith-related errors. Otherwise High Availability may not work correctly.
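The quorum part of this check can be scripted. The sketch below looks for the exact phrase partition with quorum in the pcs status output. The helper name has_quorum is hypothetical, and stonith messages still need to be reviewed by hand.

```shell
# Succeeds if the text on stdin reports a quorate partition.
# The match is case-sensitive, so "partition WITHOUT quorum" does not match.
has_quorum() {
    grep -q "partition with quorum"
}

if command -v pcs >/dev/null 2>&1; then
    if pcs status 2>&1 | has_quorum; then
        echo "cluster has quorum"
    else
        echo "cluster does NOT have quorum" >&2
    fi
else
    echo "pcs not found; run this on a cluster node" >&2
fi
```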

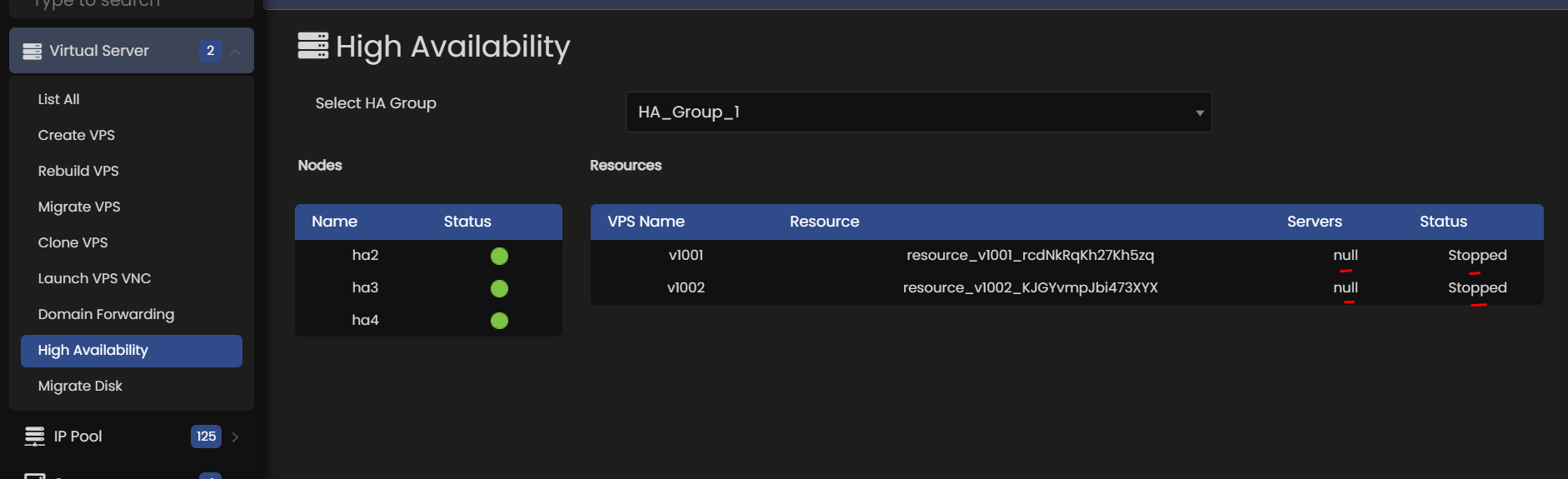

Null resource

If HA attempts to start a resource (VPS) and it fails multiple times, it sets the resource failcount to INFINITY:

Failcounts for resource 'resource_v1002_KJGYvmpJbi473XYX'

ha2 : INFINITY

ha3 : INFINITY

ha4 : INFINITY
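Failcounts like the ones listed above can be queried from any cluster node. A sketch, using the resource name from the sample output:

```shell
# Resource name taken from the sample output above.
RESOURCE="resource_v1002_KJGYvmpJbi473XYX"

if command -v pcs >/dev/null 2>&1; then
    # Lists the failcount of the resource on each node.
    pcs resource failcount show "$RESOURCE"
else
    echo "pcs not found; run this on a cluster node" >&2
fi
```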

The resource then shows up as a null resource.

To start the resource in HA again, the resource must be cleaned up.
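A sketch of the clean-up, assuming pcs is available and using the resource name from the failcount example. Replace it with the name of the affected resource.

```shell
# Resource name from the failcount example; replace with your own.
RESOURCE="resource_v1002_KJGYvmpJbi473XYX"

if command -v pcs >/dev/null 2>&1; then
    # Clears the recorded failures so the cluster re-checks the resource.
    pcs resource cleanup "$RESOURCE"
else
    echo "pcs not found; run this on a cluster node" >&2
fi
```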

HA should then attempt to start the resource. It will appear as active, and the nodes will be listed for those VPSes instead of Null.