Task not updated since 30 minutes

Follow these steps:

- Log in to both the Master and the Slave (the Source server, from which the migration is started).

- Then log in to phpMyAdmin on both servers (in the Virtualizor panel: Configuration > phpmyadmin).

- On the Master, select the virtualizor database and run the following query:

select max(slaveactid) from tasks where serid=SLAVEID

Here, SLAVEID is the ID of the Slave (Source) server.

- Now, on the Slave server, select the virtualizor database and check the entries in the tasks table.

Run the following query on the Slave server:

select max(actid) from tasks

If this value is lower than the one returned on the Master, change the AUTO_INCREMENT value of the tasks table on the Slave to a number greater than the Master's value.

Then start the migration again; this should solve the problem.
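The comparison above can be scripted along these lines. This is a minimal sketch, not Virtualizor tooling: it assumes the mysql command-line client on each server can log in to the virtualizor database without a password prompt (for example through a ~/.my.cnf file), and the helper names (vdb, next_autoinc and so on) are hypothetical. It uses the Master's value plus one as the new AUTO_INCREMENT; any larger number would also work.

```shell
#!/bin/sh
# Sketch only: assumes the mysql client can log in to the "virtualizor" database
# without a password prompt (e.g. via ~/.my.cnf). Function names are hypothetical.

# Run one query against the virtualizor DB; print the bare value (no column header).
vdb() {
  mysql -N -B virtualizor -e "$1"
}

# On the Master: highest Slave task id recorded for SLAVEID ($1).
master_max_for_slave() {
  vdb "SELECT MAX(slaveactid) FROM tasks WHERE serid=$1"
}

# On the Slave: highest local task id.
slave_max_actid() {
  vdb "SELECT MAX(actid) FROM tasks"
}

# Given the Master value ($1) and the Slave value ($2), print the AUTO_INCREMENT
# to set on the Slave, or nothing if the Slave is not behind the Master.
next_autoinc() {
  local m="$1" s="$2"
  # MAX() prints NULL for an empty table; treat anything non-numeric as 0.
  case $m in ''|*[!0-9]*) m=0 ;; esac
  case $s in ''|*[!0-9]*) s=0 ;; esac
  if [ "$s" -lt "$m" ]; then
    echo $((m + 1))
  fi
}

# On the Slave: move the tasks counter to the given value ($1).
set_autoinc() {
  vdb "ALTER TABLE tasks AUTO_INCREMENT = $1"
}
```

For example, if master_max_for_slave 3 printed 5230 on the Master and slave_max_actid printed 118 on the Slave, next_autoinc 5230 118 prints 5231, which you would then pass to set_autoinc on the Slave.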

Permanent fix: increase the value of Configuration > Master settings > Logs Settings > Tasks Retention in the Virtualizor panel.

SSH connection failed

If you see this error: "SSH connection failed to destination server (xxxx) on port (xxx) from source server (xxxx) . If port used is incorrect, you can change it in Slave setting of the Destination server"

- Set the correct SSH port and number of SSH login attempts in the Master panel under Configuration > Slave settings (choose the destination server).

- Check the logs in /var/log/secure on the destination node (/var/log/auth.log on Ubuntu/Debian). The failure can be caused by a permissions problem on the directories that hold the keys.

- If saving the Slave settings does not help, try a password-less login from the Source server to the Destination:

ssh -i /var/virtualizor/ssh-keys/id_rsa -q -vv -p dest-port root@destinationIP

- If it still fails, check that PubkeyAuthentication is not disabled in the destination's SSH server configuration.

Also, the AuthorizedKeysFile line in /etc/ssh/sshd_config should be:

AuthorizedKeysFile .ssh/authorized_keys

and not:

AuthorizedKeysFile .ssh/authorized_keys2 (this may cause issues)

- If the Source server runs CentOS 6 or 7, it will have trouble connecting to servers running the latest operating systems.

If the error mentions something like 'no hostkey alg', the crypto policies on the destination node need to be updated.

To view the current crypto policy on the destination server:

update-crypto-policies --show

If it shows DEFAULT, it needs to be changed to LEGACY.

To accept older, weaker algorithms, switch it to LEGACY:

update-crypto-policies --set LEGACY

Then switch it back to DEFAULT once the migration/cloning is done:

update-crypto-policies --set DEFAULT

- If nothing works, delete the keys from the servers_sshkeys table in the Virtualizor database on the Master, and delete /var/virtualizor/ssh-keys/id_rsa on the Source server, then retry the migration from the panel.
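The checks in this list can be scripted roughly as follows. This is a sketch under stated assumptions: the key path is the one used in this article, while the function names and the 10-second connection timeout are illustrative. BatchMode=yes is a standard OpenSSH client option that turns off password prompts, so the login test fails instead of waiting for a password when key authentication does not work.

```shell
#!/bin/sh
# Sketch of the SSH checks above. The key path comes from this article; the
# function names and the 10-second timeout are illustrative assumptions.

# Run on the Source server: key-only login test. BatchMode=yes disables password
# prompts, so a non-zero exit means key authentication did not work.
test_ssh_login() {
  ssh -i /var/virtualizor/ssh-keys/id_rsa -o BatchMode=yes -o ConnectTimeout=10 \
    -p "$2" "root@$1" true
}

# Run on the Destination: flag sshd settings that break key-based login.
# Takes a config file (default /etc/ssh/sshd_config); returns 1 if a problem is found.
check_sshd_config() {
  local cfg="${1:-/etc/ssh/sshd_config}" rc=0
  if grep -Eiq '^[[:space:]]*PubkeyAuthentication[[:space:]]+no' "$cfg"; then
    echo "PubkeyAuthentication is disabled"
    rc=1
  fi
  # An AuthorizedKeysFile line that lists only authorized_keys2 (or other files).
  if grep -Eiq '^[[:space:]]*AuthorizedKeysFile[[:space:]]' "$cfg" &&
     ! grep -Eiq '^[[:space:]]*AuthorizedKeysFile.*\.ssh/authorized_keys([[:space:]]|$)' "$cfg"; then
    echo "AuthorizedKeysFile does not list .ssh/authorized_keys"
    rc=1
  fi
  return "$rc"
}

# Run on the Destination: succeed if the active crypto policy is DEFAULT, the case
# where this article recommends switching to LEGACY for the migration.
needs_legacy_policy() {
  local policy="${1:-$(update-crypto-policies --show)}"
  case $policy in
    DEFAULT*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, run test_ssh_login 192.0.2.10 22 on the Source server (substituting the Destination's address and SSH port), and check_sshd_config and needs_legacy_policy on the Destination. As root, sshd -T prints the effective sshd settings, so saving its output to a file and passing that file to check_sshd_config also works.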

Could not make the TAR of the Source VPS

Disk IO error

One possible cause of the above error is a problem with the disk on the server itself.

In that case you will see an error similar to the following in the Transfer Log tab of the migration task on the Tasks page:

dd: reading `/dev/vg/vsv1001-d9axstuprrh64nus-sj9hbyyxt1ayiwtq` : Input/output error

1030616+0 records in

1030616+0 records out

527675392 bytes (528 MB) copied, 70.4802 s, 7.5 MB/s

46M in 2.000s 186.459m/s -s 512 -w 2 -m 10000.000

throttle: 98M, 18M in 2.031s 74.804m/s -s 512 -w 2 -m 10000.000

.....

throttle: 204M, 11M in 23.448s 3.884m/s -s 512 -w 2 -m 10000.000

1030616+0 records in

1030616+0 records out

527675392 bytes (528 MB) copied, 70.4049 s, 7.5 MB/s

1 0 0 0 0

1

mig_completed

Check the logs under /var/log/ on the server to verify whether its disk has any issues.

In this case, you can try enabling rescue mode for that VPS from the panel so that you can retrieve its data, if possible.

Also remove any orphaned disks under Storage > Orphaned Disks.
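A rough way to search the server logs for disk errors is sketched below. The default log locations are assumptions that vary by distribution (/var/log/messages on CentOS-style systems, /var/log/syslog and /var/log/kern.log on Debian/Ubuntu), and find_io_errors is a hypothetical helper; it can also be pointed at a saved copy of the migration's transfer log.

```shell
#!/bin/sh
# Sketch: search logs for disk read/write failures like the dd error above.
# With no arguments it checks common syslog locations (which ones exist varies
# by distribution); you can also pass a saved copy of the transfer log.
find_io_errors() {
  if [ "$#" -eq 0 ]; then
    for f in /var/log/messages /var/log/syslog /var/log/kern.log; do
      [ -r "$f" ] && set -- "$@" "$f"
    done
  fi
  # Nothing readable to search.
  [ "$#" -gt 0 ] || return 2
  # -H prints the file name; matches dd's "Input/output error" and kernel "I/O error" lines.
  grep -HiE 'Input/output error|I/O error' "$@"
}
```

Kernel I/O error lines usually name the affected device (for example dev sda), which points to the disk to investigate.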

If instead you see these error messages in the logs of the migration task:

gzip: stdout: Broken pipe

dd: writing to `standard output': Broken pipe

then verify that password-less login from the Source server to the Destination server works:

ssh -i /var/virtualizor/ssh-keys/id_rsa -q -p dest-port root@destinationIP

OpenVZ container

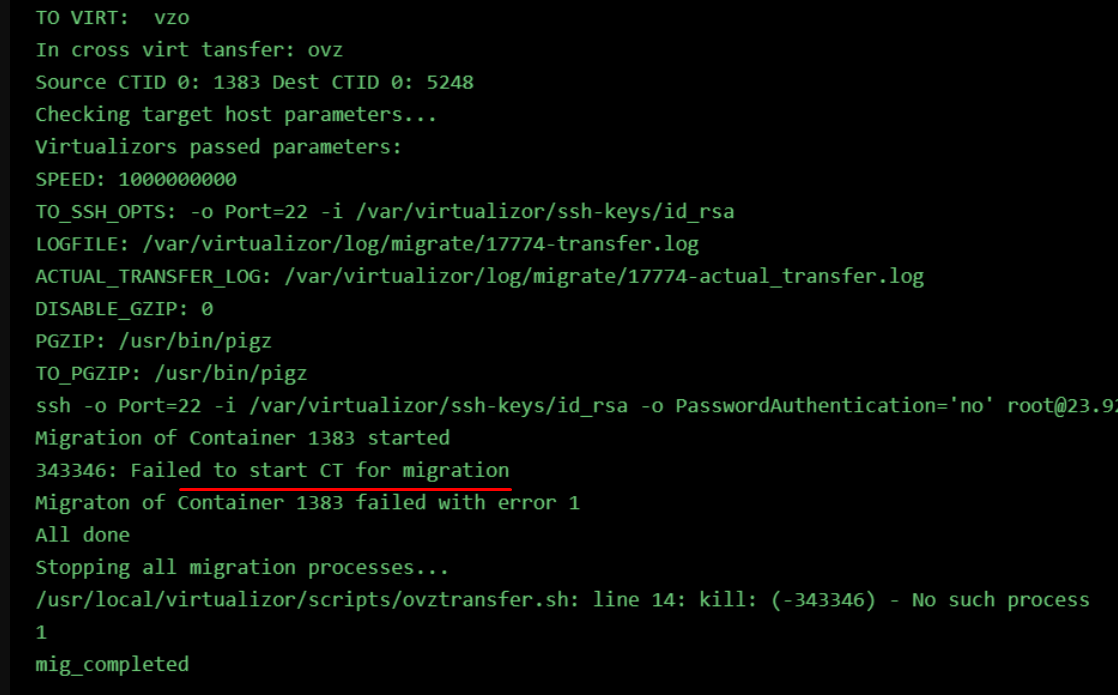

If you are migrating an OpenVZ container and it fails with the error:

Progress: -1: Could not make the TAR of the Source VPS

and the Transfer tab of the migration task shows the following error:

then it is most likely failing because starting the container has been disabled.

If the container was migrated from another server or control panel, it may have been left disabled.

You should see an error similar to this when trying to start the container manually (for example with vzctl start XXX):

Container start disabled

where XXX is the CTID.

In that case, clear the container's disabled flag.
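Assuming a legacy OpenVZ host managed with vzctl, the flag can be checked and cleared roughly as follows. vzctl set CTID --disabled no --save is the standard vzctl way to allow a container to start again; the helper names and the optional config path argument are illustrative, and the default path assumes the usual /etc/vz/conf/CTID.conf layout.

```shell
#!/bin/sh
# Sketch for legacy OpenVZ hosts managed with vzctl; $1 is the CTID.
# Assumes the standard config location /etc/vz/conf/CTID.conf; the optional
# second argument (a config path) exists only to make the check testable.

# Succeed if the container's config has start disabled (DISABLED="yes").
ct_is_disabled() {
  grep -Eq '^[[:space:]]*DISABLED="?yes"?' "${2:-/etc/vz/conf/$1.conf}"
}

# Clear the flag and save it to the container's config file.
ct_enable() {
  vzctl set "$1" --disabled no --save
}
```

For example, ct_is_disabled 101 && ct_enable 101, where 101 stands for your CTID.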

After that, migration from the panel should work again for that container.

Please download the throttle package and install it using the commands given below:

For 64-bit OS:

Ubuntu and Proxmox

# dpkg -i throttle_1.2-5_amd64.deb
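Since the package above is built for amd64, a small guard can keep it from being installed on a mismatched host. This is a sketch: the helper names are hypothetical, and it assumes the package installs a command named throttle.

```shell
#!/bin/sh
# Sketch: install the throttle .deb only when it matches the host architecture.
# The default file name is the one from this article; pass your own path if it differs.

# Succeed if a Debian package file name ($1) ends with the given architecture ($2).
deb_matches_arch() {
  case ${1%.deb} in
    *_"$2") return 0 ;;
    *) return 1 ;;
  esac
}

install_throttle() {
  local deb="${1:-throttle_1.2-5_amd64.deb}"
  local arch
  arch=$(dpkg --print-architecture) || return 1
  if ! deb_matches_arch "$deb" "$arch"; then
    echo "$deb does not match this host's architecture ($arch)" >&2
    return 1
  fi
  # Assumes the package provides a "throttle" command on PATH.
  dpkg -i "$deb" && command -v throttle
}
```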